The Strategy and Analysis team produce a range of analytical products, as illustrated in Figure 3.1. The purpose and scope of each of these products is summarised in this section.

The default audience of analytical products is assumed to be interested in, and semi-informed on, environmental topics. It is made up of mixed stakeholders, including MSPs, public bodies and environmental non-governmental organisations (eNGOs).

Figure 3.1 Analytical products produced by SA team

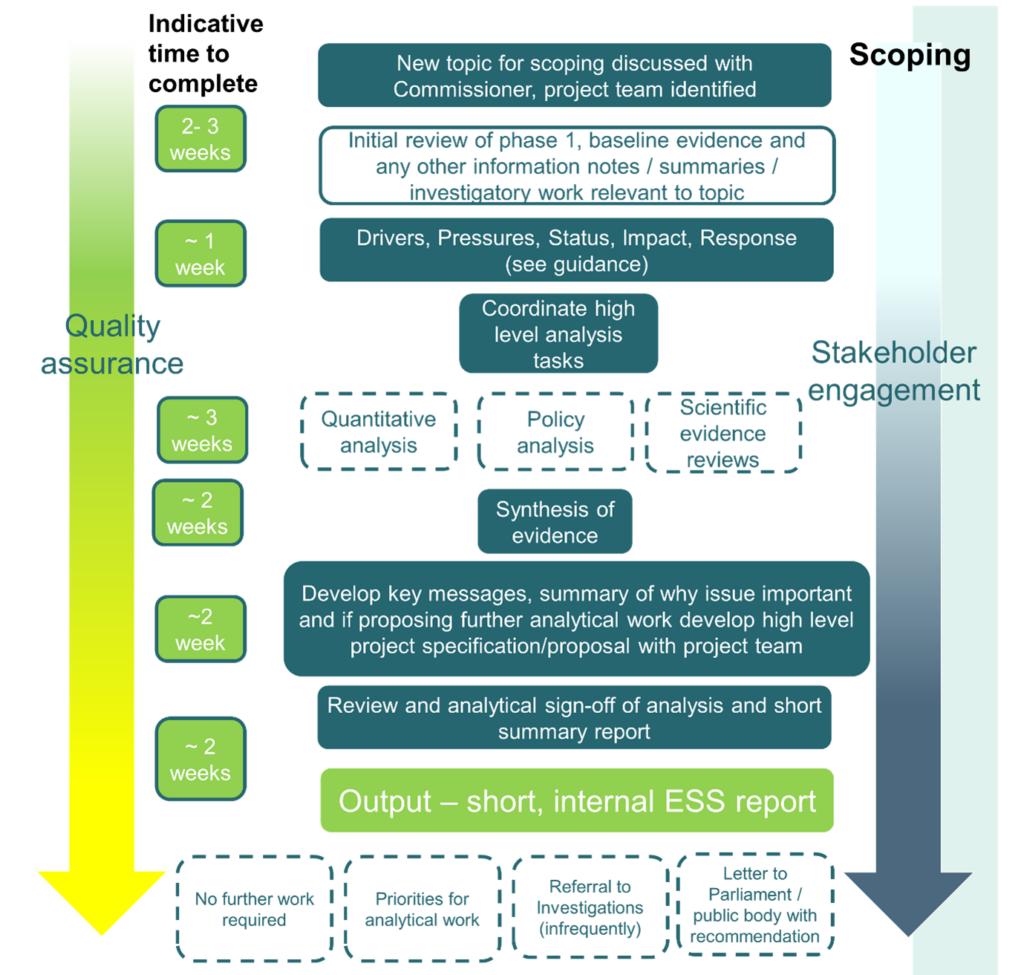

3.1 Scoping reports

Purpose. Broad but shallow review of topic leading to one of four possible outcomes (see below). If the outcome is that further analysis is required, scoping should identify the specific issues to focus on.

Audience. Internal. Unpublished.

Type of report. Short summary report explaining why the topic is important (in a page, with links to other sources), followed by some visuals (e.g. legislation/policy map, DPSIR summary, data sources, trends). It should set out the next focus and, to manage risk, explain why any topics are not included. If proposing further analytical work, the report should include a project specification/proposal to give a sense of what resource would be required. While formal theories of change are developed alongside recommendations, there should be consideration, at an earlier stage of the work, of how ESS is likely to be able to add value through changing behaviour or processes.

Out of scope. Budget (unless scoping commissioned out), communications – lines to take etc. ESS’ legal advisor should be consulted at the outset and updates should be provided to Corporate Services and Communications (CSC) and ISC through quarterly C band meetings.

Key roles. Small project team to discuss key findings and next steps. Commissioner – One of C1 for Policy, Science or Quantitative Analysis. Analytical coordinator – mostly at B band.

Duration. 2-3 months.

Quality assurance and fact checking. Light touch quality assurance of references. No external fact-checking.

External engagement. Yes. To understand what stakeholders are concerned about and where the risks are. However, stakeholder views should not drive priorities unless supported by evidence.

Board/ET engagement. Updates on progress and conclusions provided through SA update papers for information only. Decisions on next steps lie with Head of SA.

Possible outcomes. 1) No further action. 2) Further detailed analysis of one or more (prioritised) topics. 3) Issue passed to investigations (occasional). 4) Issue raised by letter with Parliament or a public authority (occasional).

3.2 Legislative rapid reviews

Purpose. Horizon scanning.

Audience. Internal. Unpublished.

Type of report. Legislative rapid review.

Out of scope. Budget (unless scoping commissioned out), communications – lines to take etc. Detailed legal advice generally not required but may be sought on more complex areas and updates should be provided through quarterly C band meetings.

Key roles. Commissioner – Head of Policy Analysis and Horizon Scanning. Analytical coordinator/lead analyst – B band from policy team.

Duration. 2-4 weeks.

Quality assurance and fact checking. Light touch quality assurance within policy team. No external fact-checking.

External engagement. Not normally required.

Board/ET engagement. Updates on progress provided through SA update papers for information only.

Possible outcomes. None. To provide background/context for other analytical products.

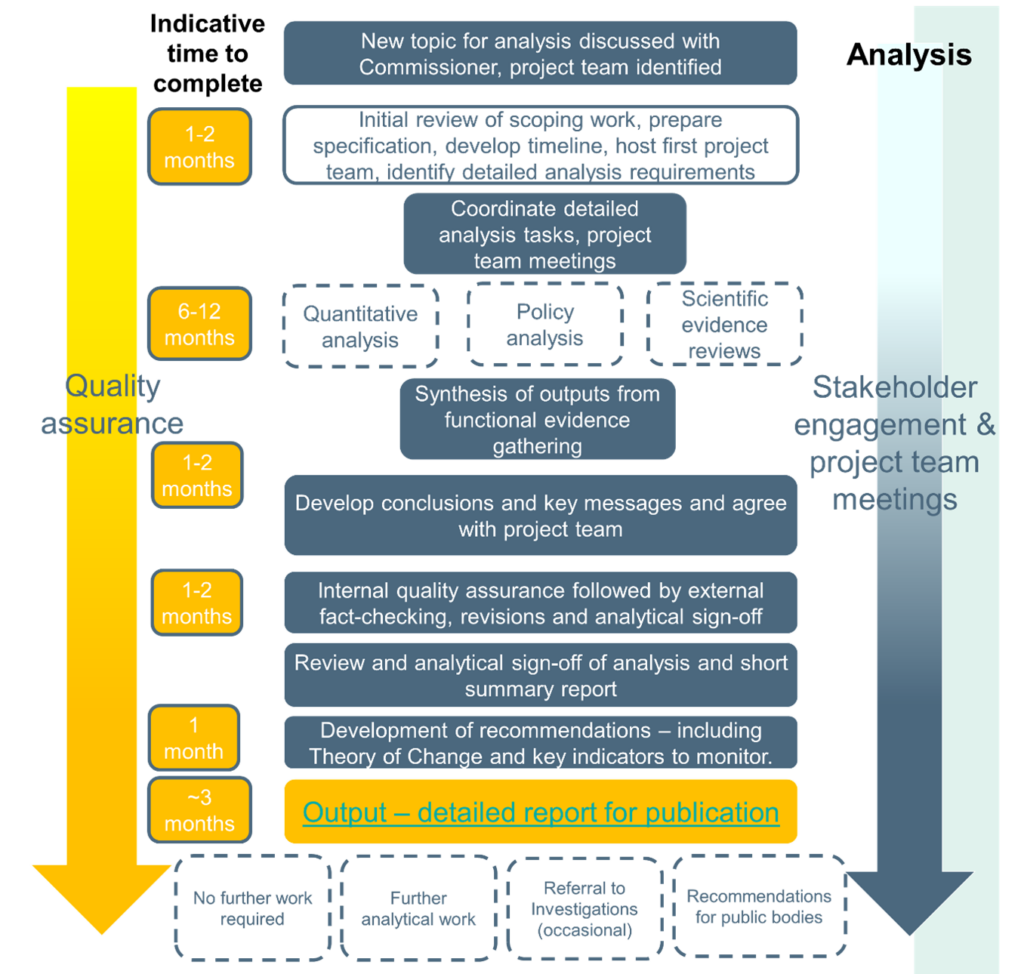

3.3 Analytical reports

Purpose. In-depth analysis of a defined, narrow topic. Will always follow scoping work, and the area of environmental law and focus (compliance and/or effectiveness) should be clear.

Audience. External published.

Type of report. Analytical report, synthesising evidence from a range of sources (quantitative, science, policy) and reaching conclusions relating to compliance with and efficacy of environmental law.

Scope. Most will not require a budget, but the option should be available. Communications and legal advice should be sought at outset and then at key stages in the process.

Key roles. Full project team with regular meetings and input on key findings and conclusions. Commissioner – One of C1 for Policy, Science or Quantitative Analysis. Analytical coordinator/lead analyst – mostly at B3 level.

Duration. Varies but likely to be at least six months and often a year or more.

Quality assurance and fact checking. Full internal quality assurance approach. External fact-checking.

External engagement. Yes. Detailed stakeholder engagement. However, stakeholder views should not drive priorities unless supported by evidence.

Board/ET engagement. Detail of specification shared at start for information. Nominated Board members engaged at key points. Updates on progress and conclusions provided through SA update papers to ET and Board. Role in agreeing final recommendations (if any).

Possible outcomes. 1) No further action. 2) Further or ongoing analysis of one or more topics (prioritised). 3) Recommendations made to public authorities. 4) Issue passed to investigations (occasional).

3.4 Consultation responses/call for evidence responses

Purpose. To provide high quality analysis to inform ESS’ position in response to a consultation or call for evidence.

Audience. External published.

Type of report. Consultation response.

Scope. Generally, does not require a budget. Legal and communications updates should be provided and advice sought as appropriate.

Key roles. Commissioner – Head of Policy Analysis and Horizon Scanning. Analysis delegated as required. A lead analyst and a second quality assurance (QA) role are normally sufficient, though more roles may be included for a multi-team response.

Duration. 4-6 weeks.

Quality assurance and fact checking. Within-team quality assurance though may need fuller approach if consultation requires a multi-team response. No external fact-checking.

External engagement. Not normally required though often part of wider engagement on associated topic.

Board/ET engagement. CEO sign-off. Board engagement if significance factors apply. Updates on progress and conclusions provided through SA update papers to ET and Board.

Possible outcomes. ESS response to consultation questions. Potential oral evidence sessions with Scottish Parliament Committees.

3.5 Investigations support

Purpose. To provide high quality, in-depth analysis of a narrow topic to support an investigation or pre-investigation.

Audience. Internal reports, potentially contributing to eventual external investigation report.

Type of report. Templates for analysis from individual teams e.g. quantitative analysis, scientific evidence review or policy analysis. Occasionally may be required to synthesise multi-team response.

Scope. Generally, does not require a budget unless commissioned out. Legal advice if appropriate.

Key roles. Commissioner – Head of Investigations, Standards and Compliance (ISC). Analytical coordinator – Identified within SA by C2. Project team depends on complexity. If single team response, then informal team of commissioner, coordinator and assurer normally sufficient. Multi-team response may require more formal team.

Duration. Variable.

Quality assurance and fact checking. Within-team quality assurance, though a fuller approach may be needed if a multi-team response is required. No external fact-checking.

External engagement. Not normally required although may feed into requests made of stakeholders by the investigations team.

Board/Executive Team (ET) engagement. As per ISC's own ET/Board engagement on the wider investigation. Updates on progress and conclusions provided through SA update papers to ET and Board.

3.6 Advice and briefing notes

Purpose. To provide high quality analysis in response to Board/ET requests.

Audience. Generally internal reports but potential to be published in some cases.

Type of report. Likely to be based on templates for analysis from individual teams e.g. quantitative analysis, scientific evidence review or policy analysis. However, format may change depending on scope of request. Occasionally may be required to synthesise multi-team response. May feed into Board/ET papers.

Scope. Generally, does not require a budget. Legal advice should be sought if appropriate. Communications advice should be sought if to be published.

Key roles. Commissioner – any part of ESS. Analytical coordinator identified by Head of SA. Need for project team depends on complexity. If single team response, then informal team of commissioner, coordinator and assurer normally sufficient. Multi-team response may require more formal team.

Duration. Variable.

Quality assurance and fact checking. Within-team quality assurance, though a fuller approach may be needed if a multi-team response is required. Generally, no external fact-checking, but this may be required if the report is intended for publication.

External engagement. Not normally required but depends on scope of request.

Board/ET engagement. Detail will vary and this should be agreed with Head of SA.

3.7 Post-intervention analysis – in development

As ESS matures, we anticipate the requirement for post-intervention analysis on an environmental topic to examine if the situation has changed. Our process for this is in development. This section of the process guidance will be updated once this methodology is agreed.

3.8 Long-term outcome indicators – in development

In the first Strategic Plan ESS set out its long-term outcome: “Scotland’s people and nature benefit from a high quality environment and are protected from harm”. The plan indicates that this will be monitored via ESS’ assessment of Scotland’s progress against environmental indicators. Development work is underway to establish a suitable methodology for measuring progress. This section of the process guidance will be updated once this methodology is agreed.